Creating a plugin Macro component

Prerequisites

- Dataiku >= 12.0

- Access to a Dataiku instance with the “Develop plugins” permission

- Access to an existing project with the following permissions:

  - “Read project content”

  - “Write project content”

Introduction

Macros in Dataiku can serve many different purposes, from automating tasks to extending the core product’s capabilities.

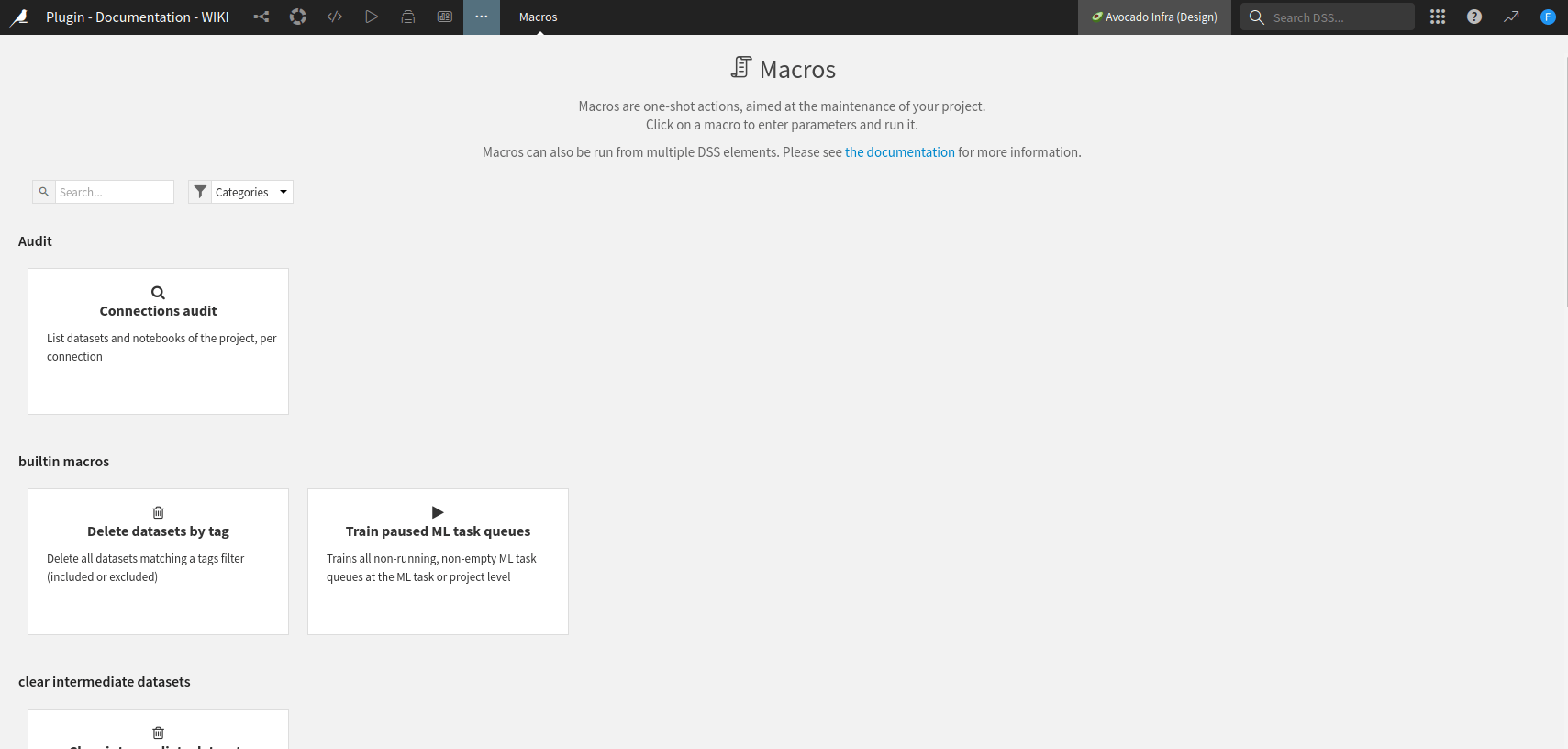

Macros are usually found in the Macros menu, under the More options menu, as shown in Fig. 1.

If your macro declares one or more "macroRoles", it can also appear in other places in Dataiku, depending on the role.

A macro isn’t restricted to a single role; it can declare several roles when appropriate.

Figure 1: Creation of a new macro.

A macro can be run in several contexts:

- Manually, by clicking on the macro’s name in the project’s macros screen (Fig. 1).

- In a dashboard, with pre-configured parameters for dashboard users.

- In a scenario.

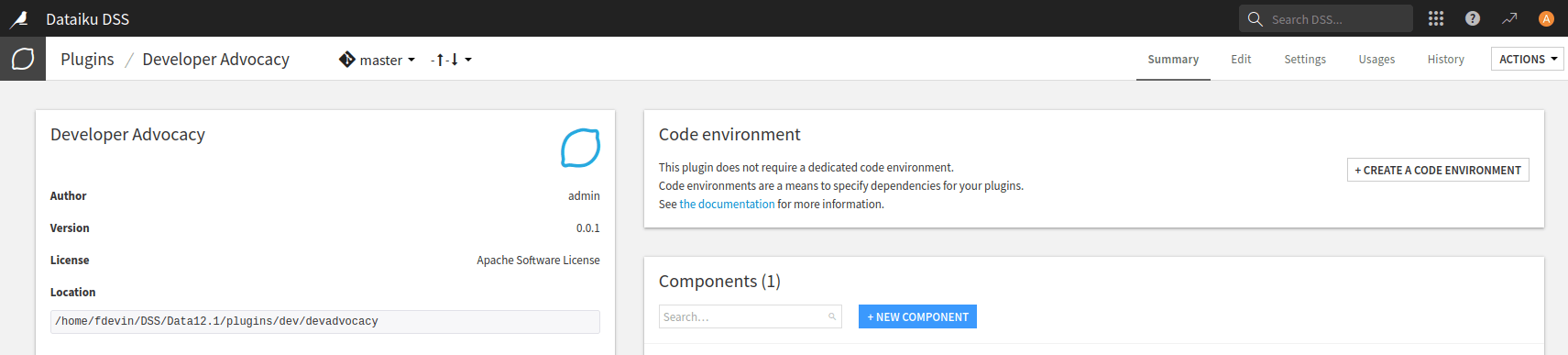

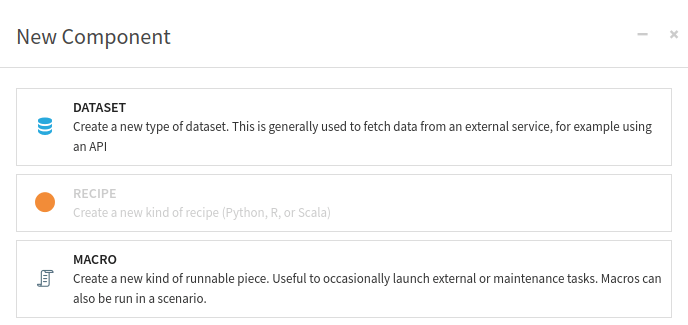

You can find some examples in the Macros documentation. To create a macro, go to the plugin editor, click the + New component button (Fig. 2), and choose the macro component (Fig. 3).

Figure 2: New component

Figure 3: New macro component

This creates a subfolder named python-runnables in your plugin directory. Within this subfolder, Dataiku creates another subfolder named after your macro.

This macro subfolder contains two files: runnable.json and runnable.py.

The runnable.json file configures your macro, while the runnable.py file contains the code that performs the processing.

Macro configuration

Code 1 shows the default configuration file generated by Dataiku. The file includes standard objects like "meta", "params", and "permissions", which are expected for all components.

For more information about these generic objects, please refer to Component: Macros.

```json
/* This file is the descriptor for the python runnable set-up-a-project */
{
    "meta": {
        // label: name of the runnable as displayed, should be short
        "label": "Custom runnable set-up-a-project",

        // description: longer string to help end users understand what this runnable does
        "description": "",

        // icon: must be one of the FontAwesome 3.2.1 icons, complete list here at https://fontawesome.com/v3.2.1/icons/
        "icon": "icon-puzzle-piece"
    },

    /* whether the runnable's code is untrusted */
    "impersonate": false,

    /* params:
    Dataiku will generate a form from this list of requested parameters.
    Your component code can then access the value provided by users using the "name" field of each parameter.

    Available parameter types include:
    STRING, INT, DOUBLE, BOOLEAN, DATE, SELECT, TEXTAREA, DATASET, DATASET_COLUMN, MANAGED_FOLDER, PRESET and others.

    For the full list and for more details, see the documentation: https://doc.dataiku.com/dss/latest/plugins/reference/params.html
    */
    "params": [
        {
            "name": "parameter1",
            "label": "User-readable name",
            "type": "STRING",
            "description": "Some documentation for parameter1",
            "mandatory": true
        },
        {
            "name": "parameter2",
            "label": "parameter2",
            "type": "INT",
            "defaultValue": 42
            /* Note that standard json parsing will return it as a double in Python (instead of an int), so you need to write
                int(self.config['parameter2'])
            */
        },
        /* A "SELECT" parameter is a multi-choice selector. Choices are specified using the selectChoices field */
        {
            "name": "parameter8",
            "label": "parameter8",
            "type": "SELECT",
            "selectChoices": [
                {
                    "value": "val_x",
                    "label": "display name for val_x"
                },
                {
                    "value": "val_y",
                    "label": "display name for val_y"
                }
            ]
        }
    ],

    /* list of required permissions on the project to see/run the runnable */
    "permissions": [],

    /* what the code's run() returns:
       - NONE : no result
       - HTML : a string that is a html (utf8 encoded)
       - FOLDER_FILE : a (folderId, path) pair to a file in a folder of this project (json-encoded)
       - FILE : raw data (as a python string) that will be stored in a temp file by Dataiku
       - URL : a url
    */
    "resultType": "HTML",

    /* label to use when the runnable's result is not inlined in the UI (ex: for urls) */
    "resultLabel": "my production",

    /* for FILE resultType, the extension to use for the temp file */
    "extension": "txt",

    /* for FILE resultType, the type of data stored in the temp file */
    "mimeType": "text/plain",

    /* Macro roles define where this macro will appear in Dataiku. They are used to pre-fill a macro parameter with context.
       Each role consists of:
        - type: where the macro will be shown
            * when selecting Dataiku object(s): DATASET, DATASETS, API_SERVICE, API_SERVICE_VERSION, BUNDLE, VISUAL_ANALYSIS, SAVED_MODEL, MANAGED_FOLDER
            * in the global project list: PROJECT_MACROS
        - targetParamsKey(s): name of the parameter(s) that will be filled with the selected object
    */
    "macroRoles": [
        /* {
            "type": "DATASET",
            "targetParamsKey": "input_dataset"
        } */
    ]
}
```
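The note on parameter2 matters in practice: your runnable receives the user's values as a dict parsed from JSON, so an INT parameter may arrive as a float. The sketch below shows one way to read the three example parameters defensively in __init__. The helper name read_params, the fallback of 42 (copied from "defaultValue"), and the validation rules are illustrative assumptions, not part of the Dataiku API.

```python
def read_params(config):
    """Read the example parameters from a macro config dict (hypothetical helper)."""
    # STRING parameter, declared as mandatory: fail early if it is missing or empty
    parameter1 = config.get("parameter1")
    if not parameter1:
        raise ValueError("parameter1 is mandatory")

    # INT parameter: JSON parsing may return 42.0 instead of 42, so cast explicitly
    parameter2 = int(config.get("parameter2", 42))

    # SELECT parameter: the value is one of the "value" fields of selectChoices
    parameter8 = config.get("parameter8")
    if parameter8 is not None and parameter8 not in ("val_x", "val_y"):
        raise ValueError("unexpected choice for parameter8: %r" % parameter8)

    return parameter1, parameter2, parameter8


# Example with the kind of dict a runnable might receive
print(read_params({"parameter1": "hello", "parameter2": 42.0, "parameter8": "val_y"}))
```

In a runnable, you would call such a helper from __init__ and store the results as attributes, so that run only deals with already-validated values.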

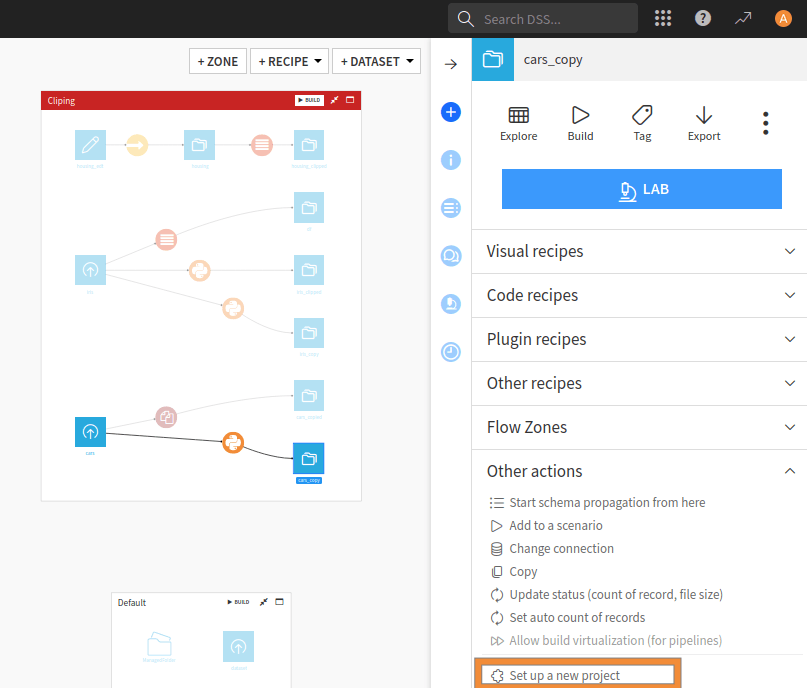

The "macroRoles" field is where you specify where your macro appears in Dataiku. For instance, if you choose "DATASET", your macro becomes visible when you select a dataset in the Flow, as shown in Fig. 4.

Various roles are available for macros, including:

- DATASET, DATASETS, API_SERVICE, API_SERVICE_VERSION, BUNDLE, VISUAL_ANALYSIS, SAVED_MODEL, MANAGED_FOLDER: for a macro that runs on that particular object (usually as an input of the macro).

- PROJECT_MACROS: for a global macro that works on the project or on the Dataiku instance, depending on the processing.

- PROJECT_CREATOR: for defining a new way of creating projects, for example with specific permissions, a set of usable datasets, or a dedicated code environment.
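An object role pre-fills the parameter named by "targetParamsKey", so that key should match the "name" of a declared parameter. The following plain-Python sketch checks this for a descriptor loaded as a dict. It is a hypothetical helper, not something Dataiku provides; the set of role types is taken from the list above, and accepting a plural "targetParamsKeys" list is an assumption based on the "targetParamsKey(s)" wording of the template. Because real runnable.json files may contain comments, the example parses a comment-free descriptor.

```python
import json

# Role types listed in the descriptor template and in the text above
OBJECT_ROLES = {
    "DATASET", "DATASETS", "API_SERVICE", "API_SERVICE_VERSION",
    "BUNDLE", "VISUAL_ANALYSIS", "SAVED_MODEL", "MANAGED_FOLDER",
}
GLOBAL_ROLES = {"PROJECT_MACROS", "PROJECT_CREATOR"}


def check_macro_roles(descriptor):
    """Return a list of problems found in a descriptor's macroRoles (hypothetical helper)."""
    param_names = {p["name"] for p in descriptor.get("params", [])}
    problems = []
    for role in descriptor.get("macroRoles", []):
        role_type = role.get("type")
        if role_type not in OBJECT_ROLES | GLOBAL_ROLES:
            problems.append("unknown role type: %s" % role_type)
            continue
        if role_type in OBJECT_ROLES:
            # Accept either a single key or a list of keys (assumed plural form)
            keys = role.get("targetParamsKeys") or [role.get("targetParamsKey")]
            for key in keys:
                if key not in param_names:
                    problems.append("%s role targets undeclared parameter %r" % (role_type, key))
    return problems


descriptor = json.loads("""
{
  "params": [{"name": "datasets", "type": "DATASETS"}],
  "macroRoles": [{"type": "DATASETS", "targetParamsKey": "datasets"}]
}
""")
print(check_macro_roles(descriptor))  # []
```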

Figure 4: UI integration for a "macroRoles": DATASET

Finally, "resultType" defines the return type of the macro. It can take one of these values:

- HTML: produces an HTML string, for example a report.

- FILE: produces raw data (as a Python string) that Dataiku stores in a temporary file.

- FOLDER_FILE: same as FILE, except that the result is a file stored in a folder of the project, identified by a (folderId, path) pair.

- URL: produces a URL.

- RESULT_TABLE: produces a tabular result, formatted for display.

- NONE: the macro does not produce any output.

We recommend that you use RESULT_TABLE rather than HTML if the output of your macro is a simple table, as you won’t have to handle styling and formatting.
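To illustrate what handling styling and formatting yourself involves, here is roughly what a run() returning HTML would have to build for a two-column table that RESULT_TABLE would otherwise render for you. This is a plain-Python sketch; the function name rows_to_html and the inline style are illustrative choices, not part of the Dataiku API.

```python
from html import escape


def rows_to_html(columns, rows):
    """Build a minimal HTML table string, escaping every cell (hypothetical helper)."""
    header = "".join("<th>%s</th>" % escape(str(c)) for c in columns)
    body = "".join(
        "<tr>%s</tr>" % "".join("<td>%s</td>" % escape(str(v)) for v in row)
        for row in rows
    )
    # With "resultType": "HTML", run() would return a string like this one
    return (
        '<table style="border-collapse: collapse">'
        "<thead><tr>" + header + "</tr></thead>"
        "<tbody>" + body + "</tbody></table>"
    )


print(rows_to_html(["Original name", "Copied name"], [["sales", "sales_copy"]]))
```

Escaping matters here: a dataset name or error message containing "<" would otherwise break the markup, which is one more detail RESULT_TABLE takes care of.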

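Alongside resultType, the resultLabel, extension, and mimeType options describe the output the macro produces: resultLabel is the label used when the result is not displayed inline in the UI (for example, for URLs), while extension and mimeType set the extension and the data type of the temporary file created for a FILE result.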

Macro execution

Code 2 shows the default code generated by Dataiku for the macro’s execution. It is divided into three distinct parts designed to help you get started quickly:

- __init__: an initialization function where you can parse the parameters entered by the user, read the plugin configuration, and perform other setup tasks that are not directly linked to the processing. This function lays the groundwork for your macro’s processing.

- get_progress_target: if your macro reports progress information, this function defines the final state. It usually returns a tuple (target, unit), where target is the number of steps and unit is one of SIZE, FILES, RECORDS, or NONE. Depending on your macro, use SIZE when uploading a file, FILES when reading several files, RECORDS when processing a list of records, and NONE to report global progress. The default implementation returns None, meaning the macro does not report progress.

- run: the code that performs the macro’s processing. Depending on the macro’s type, the processing can take various forms. run receives a progress_callback function, which you call with the current progress value.

```python
# This file is the actual code for the Python runnable set-up-a-project
from dataiku.runnables import Runnable


class MyRunnable(Runnable):
    """The base interface for a Python runnable"""

    def __init__(self, project_key, config, plugin_config):
        """
        :param project_key: the project in which the runnable executes
        :param config: the dict of the configuration of the object
        :param plugin_config: contains the plugin settings
        """
        self.project_key = project_key
        self.config = config
        self.plugin_config = plugin_config

    def get_progress_target(self):
        """
        If the runnable will return some progress info, have this function return a tuple of
        (target, unit) where unit is one of: SIZE, FILES, RECORDS, NONE
        """
        return None

    def run(self, progress_callback):
        """
        Do stuff here. Can return a string or raise an exception.
        The progress_callback is a function expecting 1 value: current progress
        """
        raise Exception("unimplemented")
```
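To see how the three methods fit together, here is a sketch that runs outside Dataiku. It defines a stand-in Runnable base class (an assumption made only so the code runs without the dataiku package; in a plugin, import the real one from dataiku.runnables) and simulates how the framework might drive the macro, passing a list's append method as progress_callback. The class name CountingRunnable and the "items" parameter are hypothetical.

```python
class Runnable(object):
    """Stand-in for dataiku.runnables.Runnable so this sketch runs outside Dataiku."""


class CountingRunnable(Runnable):
    """Processes a list of items and reports progress after each one."""

    def __init__(self, project_key, config, plugin_config):
        self.project_key = project_key
        self.plugin_config = plugin_config
        self.items = config.get("items", [])

    def get_progress_target(self):
        # One step per item, reported as global progress
        return (len(self.items), "NONE")

    def run(self, progress_callback):
        processed = []
        for index, item in enumerate(self.items):
            processed.append(str(item).upper())  # placeholder for the real processing
            progress_callback(index + 1)  # number of steps completed so far
        return ", ".join(processed)


# Simulate the calls the framework would make
reported = []
macro = CountingRunnable("MYPROJECT", {"items": ["a", "b", "c"]}, {})
target, unit = macro.get_progress_target()
result = macro.run(reported.append)
print(target, unit, reported, result)  # 3 NONE [1, 2, 3] A, B, C
```

Keeping the target in get_progress_target consistent with the values passed to progress_callback (here, 1 to len(items)) is what lets the UI display a meaningful progress bar.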

Simple example

In this example, you create a macro that copies a set of datasets; only SQL and uploaded datasets are supported. The macro needs to ask the user which datasets to copy and which suffix to add to the name of each copy. It also needs to display the result of the copy, so you set "resultType" to "RESULT_TABLE". This leads to the configuration shown in Code 3.

```json
{
    "meta": {
        "label": "Copy datasets",
        "description": "Allows copying multiple datasets in one action. Only SQL and uploaded datasets can be copied.",
        "icon": "icon-copy"
    },
    "params": [
        {
            "name": "datasets",
            "label": "Datasets to copy",
            "type": "DATASETS",
            "description": "Datasets to copy (SQL and uploaded datasets only)",
            "mandatory": true
        },
        {
            "name": "text",
            "label": "Suffix",
            "type": "STRING",
            "description": "Suffix added to the name of each copy",
            "defaultValue": "_copy",
            "mandatory": true
        }
    ],
    "impersonate": false,
    "permissions": [],
    "resultType": "RESULT_TABLE",
    "macroRoles": [
        {
            "type": "DATASETS",
            "targetParamsKey": "datasets"
        }
    ]
}
```

The process shown in Code 4 is as follows:

- Get the required information from the user in the __init__ function.

- Copy the datasets in the run function.

The lines that use ResultTable (creating the table, declaring its two columns with add_column, and adding one row per dataset with add_record) generate the output report of the macro.

```python
# This file is the actual code for the Python runnable set-up-a-project
import dataiku
from dataiku.runnables import Runnable, ResultTable


class MyRunnable(Runnable):
    """The base interface for a Python runnable"""

    def __init__(self, project_key, config, plugin_config):
        """
        :param project_key: the project in which the runnable executes
        :param config: the dict of the configuration of the object
        :param plugin_config: contains the plugin settings
        """
        self.project_key = project_key
        self.plugin_config = plugin_config
        # Parameters entered by the user (see runnable.json)
        self.datasets = config.get('datasets', [])
        self.text = config.get('text', '_copy')
        self.client = dataiku.api_client()
        # Work on the project the macro is run from
        self.project = self.client.get_project(self.project_key)

    def get_progress_target(self):
        """
        If the runnable will return some progress info, have this function return a tuple of
        (target, unit) where unit is one of: SIZE, FILES, RECORDS, NONE
        """
        # One progress step per dataset to copy
        return (len(self.datasets), 'NONE')

    def run(self, progress_callback):
        """
        Do stuff here. Can return a string or raise an exception.
        The progress_callback is a function expecting 1 value: current progress
        """
        # Output report: one row per dataset
        rt = ResultTable()
        rt.add_column("1", "Original name", "STRING")
        rt.add_column("2", "Copied name", "STRING")

        for index, name in enumerate(self.datasets):
            record = [name]
            progress_callback(index + 1)
            try:
                dataset = self.project.get_dataset(name)
                settings = dataset.get_settings().get_raw()
                params = settings.get('params', {})
                # SQL datasets store a table, uploaded datasets a path:
                # point the copy to a new location so the source is not overwritten
                if params.get('table'):
                    params['table'] = "${projectKey}/" + name + self.text
                if params.get('path'):
                    params['path'] = "${projectKey}/" + name + self.text
                copy = self.project.create_dataset(name + self.text,
                                                   settings.get('type'),
                                                   params,
                                                   settings.get('formatType'),
                                                   settings.get('formatParams'))
                # Copy the data (syncing the schema) and wait for the job to finish
                future = dataset.copy_to(copy, True, 'OVERWRITE')
                future.wait_for_result()
                record.append("copied to " + name + self.text)
            except Exception as e:
                # Report the error in the table instead of failing the whole macro
                record.append("An error occurred: {}".format(e))
            rt.add_record(record)

        return rt
```
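The trickiest part of run is deciding where each copy is stored. The sketch below isolates that renaming logic in plain Python so you can test it without a Dataiku instance. copy_params is a hypothetical helper; it mirrors the naming used in Code 4 and assumes, as that code does, that SQL datasets keep their location in params["table"] and uploaded datasets in params["path"]. Rejecting datasets with neither key is an extra assumption of this sketch.

```python
def copy_params(params, name, suffix):
    """Return the connection params for the copy of dataset `name` (hypothetical helper)."""
    new_params = dict(params)  # shallow copy: the source settings stay untouched
    if not (new_params.get("table") or new_params.get("path")):
        raise ValueError("dataset %r has neither a table nor a path" % name)
    if new_params.get("table"):
        new_params["table"] = "${projectKey}/" + name + suffix
    if new_params.get("path"):
        new_params["path"] = "${projectKey}/" + name + suffix
    return new_params


source = {"connection": "my_sql_connection", "table": "${projectKey}/sales"}
print(copy_params(source, "sales", "_copy"))
# {'connection': 'my_sql_connection', 'table': '${projectKey}/sales_copy'}
```

Inside run, you would pass the result of copy_params to create_dataset instead of mutating the raw settings in place.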

Wrapping up

Congratulations! You have completed this tutorial and built your first macro. Understanding all these basic concepts will allow you to create more complex macros.

To go further, instead of copying datasets, you could extract some information (such as associated tags) from those datasets and display it in a table or as HTML.
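As a starting point for that exercise, here is a plain-Python sketch that turns dataset settings into rows for a two-column table. It assumes you have already collected each dataset's raw settings (for example with get_settings().get_raw(), as in Code 4) and that tags are stored under a "tags" key; that key and the helper name tags_rows are assumptions to check against your instance.

```python
def tags_rows(dataset_settings):
    """Turn {dataset name: raw settings dict} into [name, tags] rows (hypothetical helper)."""
    rows = []
    for name in sorted(dataset_settings):
        # Assumed location of the tags in the raw settings
        tags = dataset_settings[name].get("tags", [])
        rows.append([name, ", ".join(tags) if tags else "(no tags)"])
    return rows


print(tags_rows({"sales": {"tags": ["finance", "daily"]}, "customers": {}}))
# [['customers', '(no tags)'], ['sales', 'finance, daily']]
```

In the macro, each row would then go to rt.add_record after declaring two STRING columns with rt.add_column.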

Here is the complete version of the code presented in this tutorial:

runnable.json

```json
{
    "meta": {
        "label": "Copy datasets",
        "description": "Allows copying multiple datasets in one action. Only SQL and uploaded datasets can be copied.",
        "icon": "icon-copy"
    },
    "params": [
        {
            "name": "datasets",
            "label": "Datasets to copy",
            "type": "DATASETS",
            "description": "Datasets to copy (SQL and uploaded datasets only)",
            "mandatory": true
        },
        {
            "name": "text",
            "label": "Suffix",
            "type": "STRING",
            "description": "Suffix added to the name of each copy",
            "defaultValue": "_copy",
            "mandatory": true
        }
    ],
    "impersonate": false,
    "permissions": [],
    "resultType": "RESULT_TABLE",
    "macroRoles": [
        {
            "type": "DATASETS",
            "targetParamsKey": "datasets"
        }
    ]
}
```

runnable.py

```python
# This file is the actual code for the Python runnable set-up-a-project
import dataiku
from dataiku.runnables import Runnable, ResultTable


class MyRunnable(Runnable):
    """The base interface for a Python runnable"""

    def __init__(self, project_key, config, plugin_config):
        """
        :param project_key: the project in which the runnable executes
        :param config: the dict of the configuration of the object
        :param plugin_config: contains the plugin settings
        """
        self.project_key = project_key
        self.plugin_config = plugin_config
        # Parameters entered by the user (see runnable.json)
        self.datasets = config.get('datasets', [])
        self.text = config.get('text', '_copy')
        self.client = dataiku.api_client()
        # Work on the project the macro is run from
        self.project = self.client.get_project(self.project_key)

    def get_progress_target(self):
        """
        If the runnable will return some progress info, have this function return a tuple of
        (target, unit) where unit is one of: SIZE, FILES, RECORDS, NONE
        """
        # One progress step per dataset to copy
        return (len(self.datasets), 'NONE')

    def run(self, progress_callback):
        """
        Do stuff here. Can return a string or raise an exception.
        The progress_callback is a function expecting 1 value: current progress
        """
        # Output report: one row per dataset
        rt = ResultTable()
        rt.add_column("1", "Original name", "STRING")
        rt.add_column("2", "Copied name", "STRING")

        for index, name in enumerate(self.datasets):
            record = [name]
            progress_callback(index + 1)
            try:
                dataset = self.project.get_dataset(name)
                settings = dataset.get_settings().get_raw()
                params = settings.get('params', {})
                # SQL datasets store a table, uploaded datasets a path:
                # point the copy to a new location so the source is not overwritten
                if params.get('table'):
                    params['table'] = "${projectKey}/" + name + self.text
                if params.get('path'):
                    params['path'] = "${projectKey}/" + name + self.text
                copy = self.project.create_dataset(name + self.text,
                                                   settings.get('type'),
                                                   params,
                                                   settings.get('formatType'),
                                                   settings.get('formatParams'))
                # Copy the data (syncing the schema) and wait for the job to finish
                future = dataset.copy_to(copy, True, 'OVERWRITE')
                future.wait_for_result()
                record.append("copied to " + name + self.text)
            except Exception as e:
                # Report the error in the table instead of failing the whole macro
                record.append("An error occurred: {}".format(e))
            rt.add_record(record)

        return rt
```