Creating a Dash application using an LLM-based agent

In this tutorial, you will learn how to build an LLM-based agent application using Dash. The application retrieves customer and company information based on a login. It relies on two tools: the first retrieves a user’s name, position, and company from a dataset, given their login; the second searches the Internet for information about the company.

This tutorial is based on the Building and using an agent with Dataiku’s LLM Mesh and Langchain tutorial and uses the same tools and agent in a similar context. If you have already followed that tutorial, you can jump to the Creating the Dash application section.

Prerequisites

Administrator permission to build the template

An LLM connection configured

A Dataiku version > 12.6.2

A code environment named dash_GenAI with the following packages: dash, dash-bootstrap-components, langchain==0.2.0, and duckduckgo_search==6.1.0

Creating the Agent application

Preparing the data

The first tool reads from a dataset that stores each user’s ID, name, position, and company, so you need to create that dataset first.

| id | name | job | company |
|---|---|---|---|
| tcook | Tim Cook | CEO | Apple |
| snadella | Satya Nadella | CEO | Microsoft |
| jbezos | Jeff Bezos | CEO | Amazon |
| fdouetteau | Florian Douetteau | CEO | Dataiku |
| wcoyote | Wile E. Coyote | Business Developer | ACME |

Table 1, which can be downloaded here, contains this data.

Create an SQL dataset named pro_customers_sql by uploading the CSV file and using a Sync recipe to store the data in an SQL connection.
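If you cannot download the file, the short sketch below rebuilds Table 1 as CSV text with Python's standard csv module. The function name table1_csv is hypothetical; write its result to a .csv file of your choice and upload that file as described above.

```python
import csv
import io

# Rows of Table 1: (id, name, job, company)
ROWS = [
    ("tcook", "Tim Cook", "CEO", "Apple"),
    ("snadella", "Satya Nadella", "CEO", "Microsoft"),
    ("jbezos", "Jeff Bezos", "CEO", "Amazon"),
    ("fdouetteau", "Florian Douetteau", "CEO", "Dataiku"),
    ("wcoyote", "Wile E. Coyote", "Business Developer", "ACME"),
]


def table1_csv() -> str:
    """Return Table 1 as CSV text, header row first (hypothetical helper)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["id", "name", "job", "company"])
    writer.writerows(ROWS)
    return buffer.getvalue()
```

The header names matter: the customer tool queries the "id", "name", "job", and "company" columns.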

LLM initialization and library import

Make sure you have a valid LLM ID before creating your Dash application.

The documentation provides instructions on obtaining an LLM ID.

Create a new webapp by clicking on </> > Webapps.

Click +New webapp, choose Code webapp, click the Dash button, select the An empty Dash app option, and give the webapp a meaningful name.

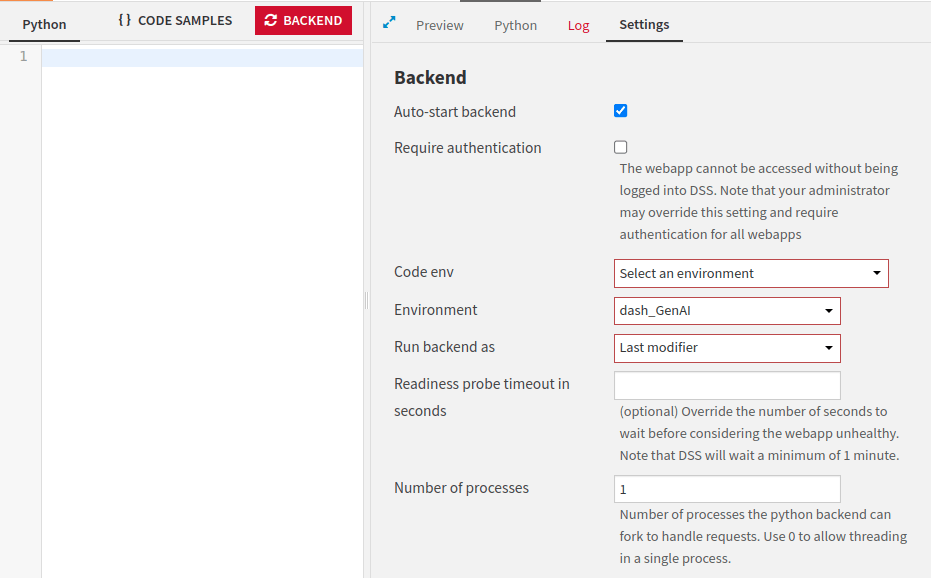

Go to the Settings tab, select the dash_GenAI code environment for the Code env option, and remove the code from the Python tab, as shown in Figure 1.

Figure 1: Dash settings.

To begin with, set up the development environment by importing the necessary libraries and initializing the chat LLM used to create the agent. The tutorial relies on the LLM Mesh for the LLM and on the Langchain package to orchestrate the process. The DKUChatModel class calls a model registered in the LLM Mesh and exposes it as a Langchain chat model. Code 1 imports the needed libraries and initializes the LLM.

```python
from dash import html
from dash import dcc
import dash_bootstrap_components as dbc
from dash.dependencies import Input
from dash.dependencies import Output
from dash.dependencies import State
from dash import no_update
from dash import set_props

import dataiku
from dataiku.langchain.dku_llm import DKUChatModel
from dataiku import SQLExecutor2
from dataiku.sql import Constant, toSQL, Dialects

from duckduckgo_search import DDGS

from langchain.agents import AgentExecutor
from langchain.agents import create_react_agent
from langchain_core.prompts import ChatPromptTemplate
from langchain.tools import BaseTool, StructuredTool
from langchain.pydantic_v1 import BaseModel, Field

from typing import Type
from textwrap import dedent

# In a Dataiku Dash webapp, the `app` object is created by Dataiku
dbc_css = "https://cdn.jsdelivr.net/gh/AnnMarieW/dash-bootstrap-templates/dbc.min.css"
app.config.external_stylesheets = [dbc.themes.SUPERHERO, dbc_css]

LLM_ID = ""  # Fill in with a valid LLM ID
DATASET_NAME = "pro_customers_sql"
VERSION = "V3"  # Display version used by the complete application: "V1", "V2" or "V3"

# Expose the LLM Mesh model as a Langchain chat model
llm = DKUChatModel(llm_id=LLM_ID, temperature=0)
```

Tools definition

Next, define the tools the application needs. There are several ways to define a tool. The most precise one is to define classes that encapsulate the tool. Alternatively, you can use the @tool decorator or the StructuredTool.from_function function, but tools defined this way may require more work when used in a chain.

To define a tool using classes, follow two steps:

Define the interface: which parameters your tool takes.

Define the code: how the tool is executed.
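To make the split between interface and code concrete outside of Langchain, here is a minimal, hypothetical sketch using only the standard library. SimpleTool, CustomerInfoArgs, and EchoCustomer are illustrative names, not Langchain classes; in Langchain, args_schema is a Pydantic model and the logic lives in _run, as in Code 2.

```python
from dataclasses import dataclass, fields
from typing import Any, ClassVar, Dict, Type


@dataclass
class CustomerInfoArgs:
    """Interface: the parameters the tool accepts (hypothetical stand-in for CustomerInfo)."""
    id: str


class SimpleTool:
    """Hypothetical base class: a name, a description, an argument schema and a _run method."""
    name: ClassVar[str] = ""
    description: ClassVar[str] = ""
    args_schema: ClassVar[Type[Any]]  # set by each subclass

    def run(self, arguments: Dict[str, Any]) -> str:
        # Step 1 (interface): check the arguments against the schema's fields
        expected = {f.name for f in fields(self.args_schema)}
        if set(arguments) != expected:
            raise ValueError(f"{self.name} expects {sorted(expected)}, got {sorted(arguments)}")
        parsed = self.args_schema(**arguments)
        # Step 2 (code): run the tool's logic with the validated arguments
        return self._run(**vars(parsed))

    def _run(self, **kwargs: Any) -> str:
        raise NotImplementedError


class EchoCustomer(SimpleTool):
    """Illustrative tool that only echoes the customer ID."""
    name = "EchoCustomer"
    description = "Echo a customer ID"
    args_schema = CustomerInfoArgs

    def _run(self, id: str) -> str:
        return f"Looked up customer {id}"
```

Keeping the schema separate from _run is what lets an agent framework describe the tool to the LLM and validate the LLM's arguments before any code runs.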

Code 2 shows how to describe a tool using classes. The CustomerInfo class defines the tool’s interface, and GetCustomerInfo implements it. This simple tool takes a customer ID as an input parameter and runs a query on the SQL dataset.

```python
class CustomerInfo(BaseModel):
    """Parameter for GetCustomerInfo"""
    id: str = Field(description="customer ID")


class GetCustomerInfo(BaseTool):
    """Gathers customer information"""

    name: str = "GetCustomerInfo"
    description: str = "Provide a name, job title and company of a customer, given the customer's ID"
    args_schema: Type[BaseModel] = CustomerInfo

    def _run(self, id: str):
        dataset = dataiku.Dataset(DATASET_NAME)
        table_name = dataset.get_location_info().get('info', {}).get('quotedResolvedTableName')
        executor = SQLExecutor2(dataset=dataset)
        # Escape the customer ID as an SQL literal instead of pasting it raw into the query
        cid = Constant(str(id))
        escaped_cid = toSQL(cid, dialect=Dialects.POSTGRES)  # Replace by your DB
        query_reader = executor.query_to_iter(
            f"""SELECT "name", "job", "company" FROM {table_name} WHERE "id" = {escaped_cid}""")
        # Return the first matching row, if any
        for (name, job, company) in query_reader.iter_tuples():
            return f"The customer's name is \"{name}\", holding the position \"{job}\" at the company named {company}"
        return f"No information can be found about the customer {id}"

    def _arun(self, id: str):
        raise NotImplementedError("This tool does not support async")
```

Attention

The SQL query might need to be written differently depending on your SQL engine; in particular, change the dialect passed to toSQL to match your database.
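To test the lookup logic outside Dataiku, a minimal sketch can use an in-memory SQLite database as a stand-in for pro_customers_sql. Here the driver's "?" placeholder plays the role that Constant and toSQL play in Code 2: the customer ID is passed as a value and escaped by the driver. The table name pro_customers and the use of sqlite3 are assumptions for illustration; placeholder syntax also differs between drivers (for example, psycopg2 uses %s).

```python
import sqlite3
from typing import Optional


def get_customer_info(conn: sqlite3.Connection, customer_id: str) -> str:
    """Same lookup and messages as GetCustomerInfo._run, with a bound parameter."""
    row: Optional[tuple] = conn.execute(
        'SELECT "name", "job", "company" FROM pro_customers WHERE "id" = ?',
        (customer_id,),  # bound value: no string concatenation, no injection
    ).fetchone()
    if row is None:
        return f"No information can be found about the customer {customer_id}"
    name, job, company = row
    return (f'The customer\'s name is "{name}", holding the position "{job}" '
            f"at the company named {company}")


def demo_connection() -> sqlite3.Connection:
    """In-memory stand-in for the pro_customers_sql dataset (hypothetical table name)."""
    conn = sqlite3.connect(":memory:")
    conn.execute('CREATE TABLE pro_customers ("id" TEXT, "name" TEXT, "job" TEXT, "company" TEXT)')
    conn.executemany(
        "INSERT INTO pro_customers VALUES (?, ?, ?, ?)",
        [("tcook", "Tim Cook", "CEO", "Apple"),
         ("wcoyote", "Wile E. Coyote", "Business Developer", "ACME")],
    )
    return conn
```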

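Similarly, Code 3 creates a tool that searches the Internet for information about a company, using the duckduckgo_search package. If a search on the company name followed by "(company)" returns nothing useful, the tool retries with the name alone.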

```python
class CompanyInfo(BaseModel):
    """Parameter for the GetCompanyInfo"""
    name: str = Field(description="Company's name")


class GetCompanyInfo(BaseTool):
    """Gathers information about a company"""

    name: str = "GetCompanyInfo"
    description: str = "Provide general information about a company, given the company's name."
    args_schema: Type[BaseModel] = CompanyInfo

    def _run(self, name: str):
        # First, search for "<name> (company)" to favor results about the company
        results = DDGS().text(name + " (company)", max_results=1)
        result = "Information found about " + name + ": " + results[0]["body"] + "\n" \
            if len(results) > 0 and "body" in results[0] \
            else None
        if not result:
            # Fall back to a search on the bare name
            results = DDGS().text(name, max_results=1)
            result = "Information found about " + name + ": " + results[0]["body"] + "\n" \
                if len(results) > 0 and "body" in results[0] \
                else "No information can be found about the company " + name
        return result

    def _arun(self, name: str):
        raise NotImplementedError("This tool does not support async")
```
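The fallback logic of GetCompanyInfo._run can be expressed as a loop over the two queries. In this sketch, the search argument is a hypothetical injectable function standing in for lambda q: DDGS().text(q, max_results=1), so the logic can be exercised without network access; the returned messages match those of Code 3.

```python
from typing import Callable, Dict, List

# A search function takes a query and returns a list of result dicts
SearchFn = Callable[[str], List[Dict[str, str]]]


def company_info(name: str, search: SearchFn) -> str:
    """Try "<name> (company)" first, then "<name>", keeping the first result with a body."""
    for query in (f"{name} (company)", name):
        results = search(query)
        if results and "body" in results[0]:
            return f"Information found about {name}: {results[0]['body']}\n"
    return f"No information can be found about the company {name}"
```

Passing the search function as a parameter is only a testing convenience; the tutorial's tool calls DDGS directly.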

Code 4 gathers these tools into a list and collects their names.

```python
tools = [GetCustomerInfo(), GetCompanyInfo()]
tool_names = [tool.name for tool in tools]
```
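The agent prompt in Code 5 refers to the tools through its {tools} and {tool_names} placeholders. create_react_agent fills them from the tools list: roughly, one text line per tool for {tools} and a comma-separated list of names for {tool_names}. The sketch below imitates that rendering with a shortened template; ToolSpec and render_prompt are hypothetical, and the exact per-tool format Langchain uses may differ between versions.

```python
from typing import List, NamedTuple


class ToolSpec(NamedTuple):
    """Hypothetical stand-in for a tool: only its name and description are rendered."""
    name: str
    description: str


# Shortened excerpt of the Code 5 prompt, keeping only the two tool placeholders
TEMPLATE = (
    "You have only access to the following tools:\n\n{tools}\n\n"
    "Action: the action to take, should be one of [{tool_names}]"
)


def render_prompt(tools: List[ToolSpec]) -> str:
    # One "name: description" line per tool (approximation of Langchain's rendering)
    tools_text = "\n".join(f"{t.name}: {t.description}" for t in tools)
    # Comma-separated tool names, so the LLM knows which actions are valid
    names = ", ".join(t.name for t in tools)
    return TEMPLATE.format(tools=tools_text, tool_names=names)
```

The descriptions are therefore the only information the LLM has about each tool, which is why they state precisely what the tool needs and returns.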

Once all the tools are defined, you are ready to create your agent. An agent combines a prompt, a set of tools, and an LLM. Code 5 creates a ReAct agent and the associated agent executor, which runs the agent and calls the tools it selects.

```python
# Initializes the agent
prompt = ChatPromptTemplate.from_template(
    """Answer the following questions as best you can. You have only access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: {input}
Thought:{agent_scratchpad}""")

agent = create_react_agent(llm, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools,
                               verbose=True, return_intermediate_steps=True, handle_parsing_errors=True)
```
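What the agent executor does with this prompt can be pictured as a loop: ask the LLM, parse either an Action/Action Input pair or a Final Answer, call the tool, and append the observation to the scratchpad. The following is a simplified, hypothetical sketch of that ReAct loop with a scripted LLM, not Langchain's implementation. As with handle_parsing_errors=True, an unparsable response is fed back to the model as an observation instead of raising an error.

```python
import re
from typing import Callable, Dict, List, Tuple

# Matches "Action: <tool>" followed on the next line by "Action Input: <input>"
ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)")


def react_loop(llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
               question: str, max_steps: int = 5) -> Tuple[str, List[Tuple[str, str]]]:
    """Simplified ReAct loop: returns (final answer, [(LLM text, observation), ...])."""
    scratchpad = ""
    steps: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        text = llm(f"Question: {question}\nThought:{scratchpad}")
        if "Final Answer:" in text:
            return text.split("Final Answer:", 1)[1].strip(), steps
        match = ACTION_RE.search(text)
        if match is None:
            observation = "Invalid format: expected Action/Action Input or Final Answer"
        else:
            tool_name, tool_input = match.group(1), match.group(2).strip().strip('"')
            tool = tools.get(tool_name)
            observation = tool(tool_input) if tool else f"{tool_name} is not a valid tool"
        steps.append((text, observation))
        # The model sees its own reasoning plus the tool result on the next turn
        scratchpad += f"{text}\nObservation: {observation}\nThought:"
    return "Agent stopped: too many steps", steps
```

The steps list corresponds loosely to the intermediate steps returned by the executor when return_intermediate_steps=True.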

Creating the Dash application

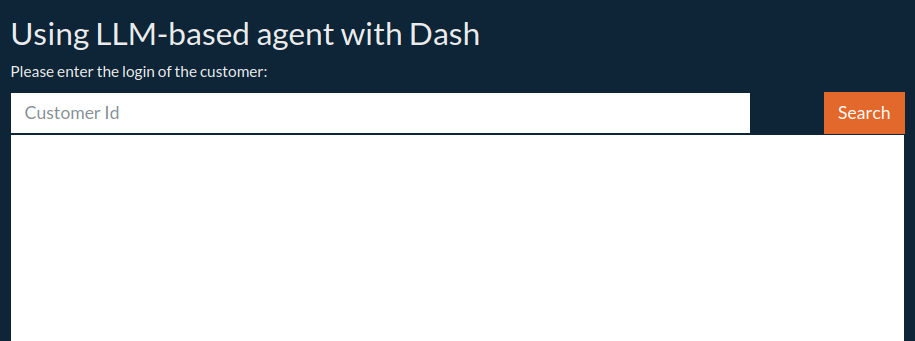

You now have a working agent; let’s build the Dash application. Code 6 creates the Dash layout shown in Figure 2: a text input for the customer ID, a Search button, and an output text area. It also defines a toast with a spinner, displayed while the agent is working, and a dcc.Store used by the incremental version of the application.

```python
# build your Dash app
v1_layout = html.Div([
    dbc.Row([html.H2("Using LLM-based agent with Dash"), ]),
    dbc.Row(dbc.Label("Please enter the login of the customer:")),
    dbc.Row([
        dbc.Col(dbc.Input(id="customer_id", placeholder="Customer Id"), width=10),
        dbc.Col(dbc.Button("Search", id="search", color="primary"), width="auto")
    ], justify="between"),
    # Dash style dictionaries use camelCase CSS property names
    dbc.Row([dbc.Col(dbc.Textarea(id="result", style={"minHeight": "500px"}), width=12)]),
    # Toast with a spinner, opened while the agent is running
    dbc.Toast(
        [html.P("Searching for information about the customer", className="mb-0"),
         dbc.Spinner(color="primary")],
        id="auto-toast",
        header="Agent working",
        icon="primary",
        is_open=False,
        style={"position": "fixed", "top": "50%", "left": "50%", "transform": "translate(-50%, -50%)"},
    ),
    # Step counter used by the incremental version (V3) to chain callbacks
    dcc.Store(id="step", data=[{"current_step": 0}]),
], className="container-fluid mt-3")

app.layout = v1_layout
```

Figure 2: Dash layout.

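The last step is to connect the Search button to a function that invokes the agent, as shown in Code 7. The running argument of the callback opens the toast and disables the button while the callback executes.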

```python
def search_V1(customer_id):
    """
    Search information about a customer

    Args:
        customer_id: customer ID

    Returns:
        the agent result
    """
    return agent_executor.invoke({
        "input": (f"Give all the professional information you can about the customer with ID: {customer_id}.\n"
                  "Also include information about the company if you can."),
        "tools": tools,
        "tool_names": tool_names
    })['output']


@app.callback(
    Output("result", "value", allow_duplicate=True),
    Input("search", "n_clicks"),
    State("customer_id", "value"),
    prevent_initial_call=True,
    # While the callback runs: open the toast and disable the Search button
    running=[(Output("auto-toast", "is_open"), True, False),
             (Output("search", "disabled"), True, False)],
)
def search(_, customer_id):
    return search_V1(customer_id)
```

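Alternatively, you can display the agent’s reasoning steps along with its final answer. Code 8 iterates over the agent executor with its iter method and concatenates the log of each intermediate step before the final result.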

```python
def search_V2(customer_id):
    """
    Search information about a customer

    Args:
        customer_id: customer ID

    Returns:
        an iterator over the agent's steps and final output
    """
    iterator = agent_executor.iter({
        "input": (f"Give all the professional information you can about the customer with ID: {customer_id.strip()}.\n"
                  "Also include information about the company if you can."),
        "tools": tools,
        "tool_names": tool_names
    })
    return iterator


@app.callback(
    Output("result", "value", allow_duplicate=True),
    Input("search", "n_clicks"),
    State("customer_id", "value"),
    prevent_initial_call=True,
    running=[(Output("auto-toast", "is_open"), True, False),
             (Output("search", "disabled"), True, False)],
)
def search(_, customer_id):
    if not customer_id:
        # Nothing to search for (search_V2 calls customer_id.strip())
        return no_update
    iterator = list(search_V2(customer_id))
    actions = ""
    for value in iterator:
        # Intermediate chunks hold a list of (action, observation) pairs
        if 'intermediate_step' in value:
            for action in value['intermediate_step']:
                actions = f"{actions}\n{action[0].log}\n{'*' * 80}"
        # The last chunk holds the final answer
        if 'output' in value:
            actions = f"{actions}\nFinal Result:\n\n{value['output']}"
    return actions
```
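The formatting part of the Code 8 callback can be checked without an LLM by feeding it chunks shaped like those it expects: dictionaries with an intermediate_step list of (action, observation) pairs, then a dictionary with output. In this sketch, FakeAction is a hypothetical stand-in for Langchain’s AgentAction (only its log attribute is used), and format_chunks is an illustrative name.

```python
from typing import Any, Dict, Iterable, NamedTuple


class FakeAction(NamedTuple):
    """Hypothetical stand-in for an agent action; only `log` is read below."""
    tool: str
    tool_input: str
    log: str


def format_chunks(chunks: Iterable[Dict[str, Any]]) -> str:
    """Concatenate each step's log, then the final answer, as the Code 8 callback does."""
    text = ""
    for chunk in chunks:
        # Intermediate chunks carry (action, observation) pairs
        for action, _observation in chunk.get("intermediate_step", []):
            text = f"{text}\n{action.log}\n{'*' * 80}"
        if "output" in chunk:
            text = f"{text}\nFinal Result:\n\n{chunk['output']}"
    return text
```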

Going further

You can test different LLMs, let the user choose another prompt, or define new tools. The complete code of the application also contains a third version (V3) that displays the agent’s result incrementally; the VERSION variable selects which version the Search button uses.
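The V3 version chains callbacks: the first callback creates a generator over the agent’s stream, and each update of the step store triggers next_value, which pulls one more value and prepends it to the text area until the generator is exhausted. The stdlib sketch below reproduces only that pull-and-prepend loop; fake_agent_stream and next_block are hypothetical names, with fake_agent_stream standing in for search_V3.

```python
from typing import Iterator, List, Optional


def fake_agent_stream() -> Iterator[List[str]]:
    """Hypothetical stand-in for search_V3: yields [action log, observation, output]."""
    yield ["Look up the customer", "", ""]
    yield ["Look up the customer", "Tim Cook, CEO, Apple", ""]
    yield ["Look up the customer", "Tim Cook, CEO, Apple", "Tim Cook is the CEO of Apple."]


def next_block(generator: Iterator[List[str]], old_text: str) -> Optional[str]:
    """Pull one value and prepend it to the existing text; None means nothing is left."""
    value = next(generator, None)
    if value is None:
        return None  # the Dash callback returns no_update at this point
    action, observation, output = value
    block = (f"Action: {action}\n"
             f"Tool Result: {observation}\n"
             f"## Final Result: {output}\n"
             f"{'-' * 80}\n")
    return block + old_text
```

Because the complete code keeps the generator in a module-level global, concurrent users of the same webapp would share it; storing one generator per session would be a safer design if several people use the application at once.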

Here is the complete code of the application presented in this tutorial:

app.py

```python
from dash import html
from dash import dcc
import dash_bootstrap_components as dbc
from dash.dependencies import Input
from dash.dependencies import Output
from dash.dependencies import State
from dash import no_update
from dash import set_props

import dataiku
from dataiku.langchain.dku_llm import DKUChatModel
from dataiku import SQLExecutor2
from dataiku.sql import Constant, toSQL, Dialects

from duckduckgo_search import DDGS

from langchain.agents import AgentExecutor
from langchain.agents import create_react_agent
from langchain_core.prompts import ChatPromptTemplate
from langchain.tools import BaseTool, StructuredTool
from langchain.pydantic_v1 import BaseModel, Field

from typing import Type
from textwrap import dedent

# In a Dataiku Dash webapp, the `app` object is created by Dataiku
dbc_css = "https://cdn.jsdelivr.net/gh/AnnMarieW/dash-bootstrap-templates/dbc.min.css"
app.config.external_stylesheets = [dbc.themes.SUPERHERO, dbc_css]

LLM_ID = ""  # Fill in with a valid LLM ID
DATASET_NAME = "pro_customers_sql"
VERSION = "V3"  # "V1", "V2" or "V3": selects how the result is displayed

llm = DKUChatModel(llm_id=LLM_ID, temperature=0)


class CustomerInfo(BaseModel):
    """Parameter for GetCustomerInfo"""
    id: str = Field(description="customer ID")


class GetCustomerInfo(BaseTool):
    """Gathers customer information"""

    name: str = "GetCustomerInfo"
    description: str = "Provide a name, job title and company of a customer, given the customer's ID"
    args_schema: Type[BaseModel] = CustomerInfo

    def _run(self, id: str):
        dataset = dataiku.Dataset(DATASET_NAME)
        table_name = dataset.get_location_info().get('info', {}).get('quotedResolvedTableName')
        executor = SQLExecutor2(dataset=dataset)
        # Escape the customer ID as an SQL literal
        cid = Constant(str(id))
        escaped_cid = toSQL(cid, dialect=Dialects.POSTGRES)  # Replace by your DB
        query_reader = executor.query_to_iter(
            f"""SELECT "name", "job", "company" FROM {table_name} WHERE "id" = {escaped_cid}""")
        for (name, job, company) in query_reader.iter_tuples():
            return f"The customer's name is \"{name}\", holding the position \"{job}\" at the company named {company}"
        return f"No information can be found about the customer {id}"

    def _arun(self, id: str):
        raise NotImplementedError("This tool does not support async")


class CompanyInfo(BaseModel):
    """Parameter for the GetCompanyInfo"""
    name: str = Field(description="Company's name")


class GetCompanyInfo(BaseTool):
    """Gathers information about a company"""

    name: str = "GetCompanyInfo"
    description: str = "Provide general information about a company, given the company's name."
    args_schema: Type[BaseModel] = CompanyInfo

    def _run(self, name: str):
        # Search for "<name> (company)" first, then fall back to the bare name
        results = DDGS().text(name + " (company)", max_results=1)
        result = "Information found about " + name + ": " + results[0]["body"] + "\n" \
            if len(results) > 0 and "body" in results[0] \
            else None
        if not result:
            results = DDGS().text(name, max_results=1)
            result = "Information found about " + name + ": " + results[0]["body"] + "\n" \
                if len(results) > 0 and "body" in results[0] \
                else "No information can be found about the company " + name
        return result

    def _arun(self, name: str):
        raise NotImplementedError("This tool does not support async")


tools = [GetCustomerInfo(), GetCompanyInfo()]
tool_names = [tool.name for tool in tools]

# Initializes the agent
prompt = ChatPromptTemplate.from_template(
    """Answer the following questions as best you can. You have only access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: {input}
Thought:{agent_scratchpad}""")

agent = create_react_agent(llm, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools,
                               verbose=True, return_intermediate_steps=True, handle_parsing_errors=True)

# build your Dash app
v1_layout = html.Div([
    dbc.Row([html.H2("Using LLM-based agent with Dash"), ]),
    dbc.Row(dbc.Label("Please enter the login of the customer:")),
    dbc.Row([
        dbc.Col(dbc.Input(id="customer_id", placeholder="Customer Id"), width=10),
        dbc.Col(dbc.Button("Search", id="search", color="primary"), width="auto")
    ], justify="between"),
    dbc.Row([dbc.Col(dbc.Textarea(id="result", style={"minHeight": "500px"}), width=12)]),
    dbc.Toast(
        [html.P("Searching for information about the customer", className="mb-0"),
         dbc.Spinner(color="primary")],
        id="auto-toast",
        header="Agent working",
        icon="primary",
        is_open=False,
        style={"position": "fixed", "top": "50%", "left": "50%", "transform": "translate(-50%, -50%)"},
    ),
    dcc.Store(id="step", data=[{"current_step": 0}]),
], className="container-fluid mt-3")

app.layout = v1_layout

AGENT_INPUT = ("Give all the professional information you can about the customer with ID: {}.\n"
               "Also include information about the company if you can.")


def search_V1(customer_id):
    """Search information about a customer; returns the agent's final answer."""
    return agent_executor.invoke({
        "input": AGENT_INPUT.format(customer_id),
        "tools": tools,
        "tool_names": tool_names
    })['output']


def search_V2(customer_id):
    """Search information about a customer; returns an iterator over the agent's steps."""
    return agent_executor.iter({
        "input": AGENT_INPUT.format(customer_id.strip()),
        "tools": tools,
        "tool_names": tool_names
    })


def search_V3(customer_id):
    """Search information about a customer; yields [action, observation, output] as the agent streams."""
    actions = ""
    steps = ""
    output = ""
    iterator = agent_executor.stream({
        "input": AGENT_INPUT.format(customer_id.strip()),
        "tools": tools,
        "tool_names": tool_names
    })
    for i in iterator:
        if "output" in i:
            output = i['output']
        elif "actions" in i:
            for action in i["actions"]:
                actions = action.log
        elif "steps" in i:
            for step in i['steps']:
                steps = step.observation
        yield [actions, steps, output]


@app.callback(
    Output("result", "value", allow_duplicate=True),
    Input("search", "n_clicks"),
    State("customer_id", "value"),
    prevent_initial_call=True,
    running=[(Output("auto-toast", "is_open"), True, False),
             (Output("search", "disabled"), True, False)],
)
def search(_, customer_id):
    if not customer_id:
        # Nothing to search for (V2 and V3 call customer_id.strip())
        return no_update
    if VERSION == "V1":
        return search_V1(customer_id)
    elif VERSION == "V2":
        iterator = list(search_V2(customer_id))
        actions = ""
        for value in iterator:
            if 'intermediate_step' in value:
                for action in value['intermediate_step']:
                    actions = f"{actions}\n{action[0].log}\n{'*' * 80}"
            if 'output' in value:
                actions = f"{actions}\nFinal Result:\n\n{value['output']}"
        return actions
    else:
        # V3: start the stream, show the first action, then let next_value pull the rest.
        # The generator is module-level, so it is shared by all users of the webapp.
        global generator
        generator = search_V3(customer_id)
        value = next(generator)
        set_props("step", {"current_step": 1})
        return value[0]


@app.callback(
    Output("result", "value", allow_duplicate=True),
    Input("step", "current_step"),
    State("result", "value"),
    prevent_initial_call=True,
    running=[(Output("auto-toast", "is_open"), True, False),
             (Output("search", "disabled"), True, False)],
)
def next_value(n, old_text):
    value = next(generator, None)
    if value:
        # Prepend the newest step to the previous text
        new_text = (f"Action: {dedent(value[0])}\n"
                    f"Tool Result: {dedent(value[1])}\n"
                    f"## Final Result: {dedent(value[2])}\n"
                    f"{'-' * 80}\n"
                    f"{old_text}\n")
        # Updating the step store triggers this callback again
        set_props("step", {"current_step": n + 1})
        return new_text
    else:
        return no_update
```