Creating a custom agent

This tutorial shows how to develop a custom agent in Dataiku. It builds on the Creating and using a Code Agent tutorial. By the end of this guide, you will know how to package a Code Agent into a Custom Agent so that it can be used across multiple projects.

Custom Agents differ significantly from project-specific Code Agents: they are independent and are not linked to a specific project, dataset, or tool. This independence makes them more versatile. It also means you must pay close attention to the code environment and its dependencies.

Prerequisites

Dataiku >= 13.4

Develop plugins permission

A connection to an LLM, preferably an OpenAI connection

Python >= 3.9

Introduction

This tutorial starts from the agent built in the Creating and using a Code Agent tutorial and shows how to package it into a Custom Agent. You can then use it in every project that needs the same processing.

A custom agent is a broader version of a code agent. Because it is not tied to a project, it must be autonomous:

- It cannot rely on tools deployed in a project, so any tool it needs must be embedded in its code.
- For the same reason, it cannot rely on a particular dataset or any other Dataiku object.
- It may run in a context other than the one used at design time, so you must pay attention to the plugin's code environment.

To develop a custom agent, you must first create a plugin.

Go to the main menu, click Plugins, and select Write your own from the Add plugin button.

Then, choose a meaningful name, such as “toolbox.”

Once the plugin is created, click the Create a code environment button and select Python as the default language.

In the requirements.txt file (located in toolbox/code-env/python/spec), add the following requirements:

```text
duckduckgo_search
langchain_core
langchain
```

Once you have saved the modification, go to the Summary tab to build the plugin code environment. The custom agent will run in this code environment whenever it is used.
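You may want to confirm that the plugin code environment actually provides these packages before going further. The sketch below is one way to check this from a notebook running on that code environment. The helper name `missing_modules` is hypothetical. It assumes the import names match the requirement names, which holds for these three packages.

```python
import importlib.util

# Import names of the packages listed in requirements.txt
REQUIRED_MODULES = ["duckduckgo_search", "langchain_core", "langchain"]


def missing_modules(module_names):
    """Return the names of the top-level modules that cannot be found (hypothetical helper)."""
    # find_spec returns None when a top-level module is not installed
    return [name for name in module_names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are available.")
```

`find_spec` only locates the modules without importing them, so the check stays fast even for large packages.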

Click the + New component button and choose the Agent component from the list, as shown in Figure 1. Then fill in the form: choose a meaningful Identifier, select “Minimal agent template” as the Starter code, and click the Add button.

Figure 1: New agent component.

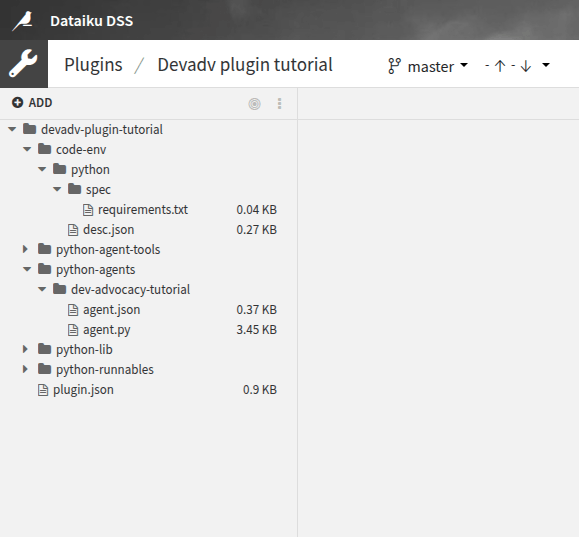

Alternatively, you can select the Edit tab and create a folder named python-agents under the toolbox directory. This directory is where you will find and create your custom agents. Under python-agents, create a directory whose name represents your agent.

Creating the agent

Analyzing the tools

The Creating and using a Code Agent tutorial uses two tools, Get Customer Info and Get Company Info. They retrieve information about a customer given their ID and about a company given its name. Get Company Info uses the DuckDuckGo Python package to search the Internet for information about a company. Its only requirement is therefore that this package be installed in the plugin code environment.

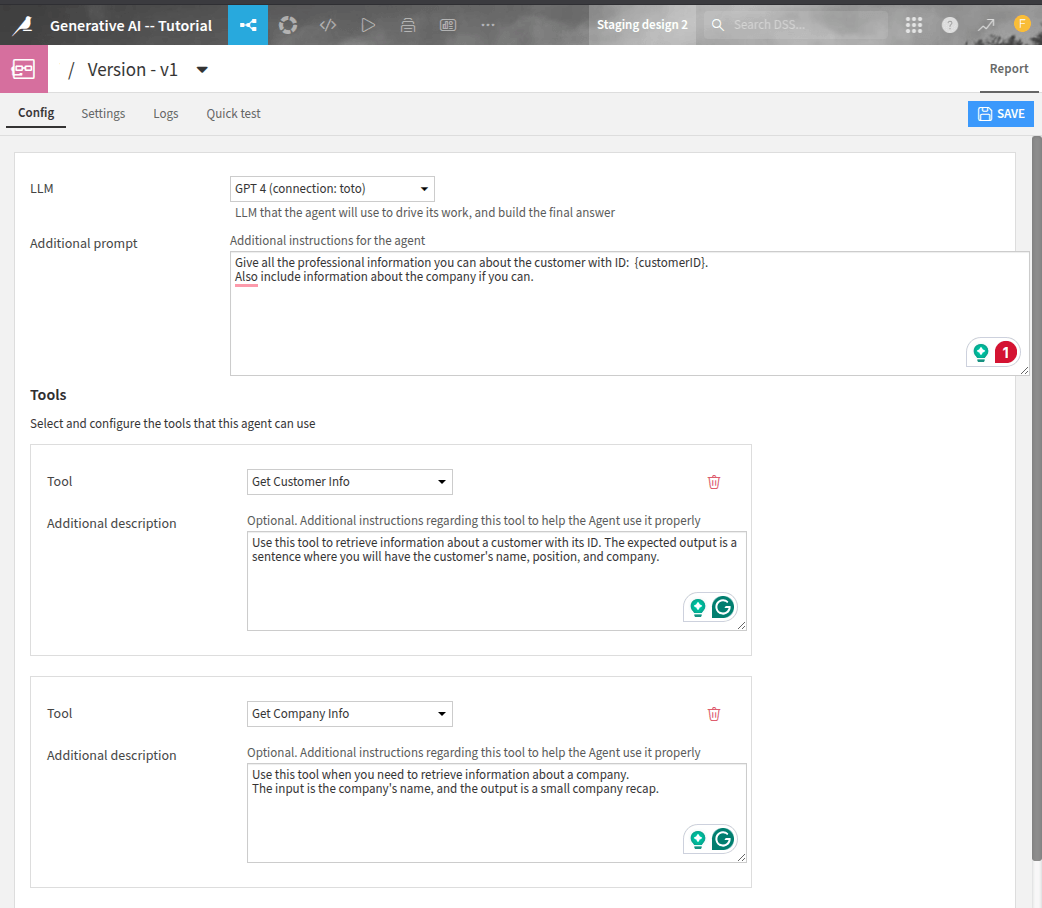

The Get Customer Info relies on a dataset to find information on a user given her ID.

As you cannot know the dataset’s name in advance, you must ask the user its name.

This can be done in the Config section of an instantiated custom agent,

as shown in Figure 2.

To ask the user for a particular dataset, specify it in the agent.json file,

as shown in Code 1 (highlighted lines).

Figure 2: Configuration of an agent.#

agent.json

```json
{
  "meta": {
    "label": "Custom agent tutorial",
    "description": "DevAdvocacy -- Custom Agent Tutorial",
    "icon": "fas fa-magic"
  },
  "supportsImageInputs": false,
  "params": [
    {
      "name": "dataset_name",
      "label": "Customer SQL Dataset",
      "type": "DATASET",
      "mandatory": true
    }
  ]
}
```
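Each entry in params appears under its name in the config dictionary that Dataiku passes to set_config. This is why the agent code later reads `config.get("dataset_name")`. The sketch below reads a descriptor like the one above and reports which mandatory parameters are missing from a given config. The helper `missing_mandatory_params` and the dataset name `customers_sql` are hypothetical; neither is part of the Dataiku API.

```python
import json

# The descriptor from Code 1, trimmed to the parts this check needs
AGENT_JSON = """
{
  "meta": {"label": "Custom agent tutorial"},
  "params": [
    {"name": "dataset_name", "label": "Customer SQL Dataset",
     "type": "DATASET", "mandatory": true}
  ]
}
"""


def missing_mandatory_params(descriptor_text, config):
    """Return the names of mandatory params that are absent or empty in config (hypothetical helper)."""
    descriptor = json.loads(descriptor_text)
    missing = []
    for param in descriptor.get("params", []):
        # Params without "mandatory": true are treated as optional
        if param.get("mandatory") and not config.get(param["name"]):
            missing.append(param["name"])
    return missing


print(missing_mandatory_params(AGENT_JSON, {}))                                 # ['dataset_name']
print(missing_mandatory_params(AGENT_JSON, {"dataset_name": "customers_sql"}))  # []
```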

Declaring the tools

Once you have analyzed the plugin's requirements, you can start coding. The Get Company Info tool is the easiest to write, because it only involves the usual work for creating a tool, as shown in Code 2. Code 2 also contains the imports and module-level variables that the rest of this tutorial relies on: the LLM connection name and the model. Replace REPLACE_WITH_YOUR_CONNECTION_NAME with the name of your OpenAI connection.

```python
from langchain import hub
from langchain.agents import AgentExecutor
from langchain.agents import create_openai_tools_agent
from langchain.tools import tool

from dataiku.llm.python import BaseLLM
from dataiku.langchain.dku_llm import DKUChatModel
from dataiku.langchain import LangchainToDKUTracer
import dataiku
from dataiku import SQLExecutor2
from duckduckgo_search import DDGS
from dataiku.sql import Constant, toSQL, Dialects

# Name of the OpenAI connection configured in your Dataiku instance
OPENAI_CONNECTION_NAME = "REPLACE_WITH_YOUR_CONNECTION_NAME"
model = DKUChatModel(llm_id=f"openai:{OPENAI_CONNECTION_NAME}:gpt-4o-mini")


@tool
def search_company_info(company_name: str) -> str:
    """
    Use this tool when you need to retrieve information on a company.
    The input of this tool is the company name.
    The output is either a small recap of the company or "No information …"
    meaning that we couldn't find information about this company
    """
    with DDGS() as ddgs:
        # Keep only the first search result
        results = list(ddgs.text(f"{company_name} (company)", max_results=1))
        if results:
            return f"Information found about {company_name}: {results[0]['body']}"
        return f"No information found about {company_name}"
```
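search_company_info calls a live web search, so its output is hard to check offline. One option is to move the formatting into a plain function that you can test with hand-written results. This is a sketch, not the tutorial's code. It assumes each search result is a dictionary with a body key, which is how the tool above reads it. The helper name `format_company_info` and the sample data are hypothetical.

```python
def format_company_info(company_name, results):
    """Build the tool's answer from search results (dicts with a 'body' key); hypothetical helper."""
    if results:
        # Same behaviour as the tool: only the first result is used
        return f"Information found about {company_name}: {results[0]['body']}"
    return f"No information found about {company_name}"


# Hand-written results standing in for a DuckDuckGo response
fake_results = [{"title": "Example Corp", "body": "Example Corp makes example products."}]
print(format_company_info("Example Corp", fake_results))
print(format_company_info("Unknown Corp", []))
```

Inside the tool, the if/return lines would then become a single `return format_company_info(company_name, results)`.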

Coding a custom agent with a parametrized tool requires minor plumbing. When the LLM uses a tool, it provides a value for every parameter of that tool. You do not want the LLM to fill in the dataset name, so the name must not be a parameter of the tool.

You might instead define the tool inside the class handling the agent (see the next section for more details on this class). In that case, you must add a self parameter, which must not be exposed to the LLM either, for the same reason. Thus, you cannot easily embed the tool in the agent's class.

An easy way to overcome these difficulties is to write a function that creates the already-parametrized tool, as shown in Code 3.

Attention

The SQL query might be written differently depending on your SQL Engine.

```python
def generate_get_customer(dataset_name: str):
    # dataset_name is captured by the closure, so the LLM never sees it
    @tool
    def get_customer(customer_id: str) -> str:
        """Get customer name, position and company information from database.
        The input is a customer id (stored as a string).
        The output is a string of the form:
        "The customer's name is \"{name}\", holding the position \"{job}\" at the company named {company}"
        """
        dataset = dataiku.Dataset(dataset_name)
        table_name = dataset.get_location_info().get('info', {}).get('quotedResolvedTableName')
        executor = SQLExecutor2(dataset=dataset)
        # Turn the customer ID into an escaped SQL literal instead of pasting it raw
        cid = Constant(str(customer_id))
        escaped_cid = toSQL(cid, dialect=Dialects.POSTGRES)  # Replace by your DB
        query_reader = executor.query_to_iter(
            f"""SELECT "name", "job", "company" FROM {table_name} WHERE "id" = {escaped_cid}""")
        for (name, job, company) in query_reader.iter_tuples():
            return f"The customer's name is \"{name}\", holding the position \"{job}\" at the company named {company}"
        return f"No information can be found about the customer {customer_id}"

    return get_customer
```
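The factory works because the inner function is a closure. dataset_name is captured when the tool is created, so the only argument left for the LLM to fill is customer_id. The sketch below shows the same pattern in plain Python, without LangChain or Dataiku. `make_lookup`, its return string, and the dataset name `customers_sql` are hypothetical stand-ins for generate_get_customer and its SQL query.

```python
import inspect


def make_lookup(dataset_name: str):
    """Return a lookup function whose only visible parameter is customer_id (hypothetical)."""

    def lookup(customer_id: str) -> str:
        # dataset_name comes from the enclosing scope, not from the caller
        return f"Looking up customer {customer_id} in dataset {dataset_name}"

    return lookup


lookup = make_lookup("customers_sql")
print(list(inspect.signature(lookup).parameters))  # ['customer_id']
print(lookup("fdouetteau"))
```

LangChain's @tool decorator derives the tool's input schema from the decorated function's signature. This is why dataset_name never appears among the arguments the LLM is asked to provide.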

Coding the agent

Once the tools are created, you can use them in your custom agent. To create a custom agent, write a Python class that inherits from the BaseLLM class. You must also define a set_config method to read the agent's parameters (if it has any). Finally, you need a method that processes the queries; in this tutorial, it is the asynchronous streaming method aprocess_stream. Code 4 shows the full implementation of the class.

See also

If you need help coding an agent, you will find explanations in the Agent section of Concepts and examples, or you can follow this tutorial.

```python
class MyLLM(BaseLLM):
    def __init__(self):
        pass

    def set_config(self, config, plugin_config):
        self.config = config
        # Name of the dataset selected by the user in the agent's Config section
        self.dataset = config.get("dataset_name")
        # Build the tool list per instance: appending to a shared module-level list
        # would add another get_customer tool each time set_config runs in the same process
        self.tools = [search_company_info, generate_get_customer(dataset_name=self.dataset)]
        self.agent = create_openai_tools_agent(model.with_config({"tags": ["agent_llm"]}),
                                               self.tools,
                                               hub.pull("hwchase17/openai-tools-agent"))

    # The type names are quoted because they are not imported in this file;
    # quoted annotations are not evaluated when the method is defined
    async def aprocess_stream(self, query: "SingleCompletionQuery", settings: "CompletionSettings",
                              trace: "SpanBuilder") -> "CompletionResponse":
        # Use the content of the first message as the agent's input
        prompt = query["messages"][0]["content"]
        tracer = LangchainToDKUTracer(dku_trace=trace)
        agent_executor = AgentExecutor(agent=self.agent, tools=self.tools)

        async for event in agent_executor.astream_events({"input": prompt}, version="v2",
                                                         config={"callbacks": [tracer]}):
            kind = event["event"]
            if kind == "on_chat_model_stream":
                # Forward the text generated by the LLM as it arrives
                content = event["data"]["chunk"].content
                if content:
                    yield {"chunk": {"text": content}}
            elif kind == "on_tool_start":
                # Event chunks are not part of the answer itself,
                # but can provide progress information
                yield {"chunk": {"type": "event", "eventKind": "tool_call",
                                 "eventData": {"name": event["name"]}}}
```

Using the agent

Once the custom agent has been coded, you can use it like any other agent; for more information, refer to the Using agents documentation. To use your custom agent, create a new agent in your project by selecting the custom agent. Then set the Customer SQL Dataset parameter in its Config section (Figure 2).

To list all the agents defined in a project, use the project's list_llms() method and search for your agent in its output:

```python
import dataiku

client = dataiku.api_client()
project = client.get_default_project()

# Print the description and ID of every LLM available in the project, agents included
llm_list = project.list_llms()
for llm in llm_list:
    print(f"- {llm.description} (id: {llm.id})")
```

Running this code snippet lists all the LLMs defined in the project. Your agent should appear in this list:

```text
- Agent - test guard (id: agent:2V8SR72P)
- Agent - useCase4 (id: agent:44pVmkUi)
- Agent - code (id: agent:4agXpWVO)
- Agent - useCase5 (id: agent:GYrrlSns)
- Agent - useCase1 (id: agent:IWX5i2Zh)
- Agent - Visual (id: agent:Njgq8s9j)
- Agent - Tutorial (id: agent:QRfIU99Z)
- Agent - useCase2 (id: agent:aolFEv0W)
- Agent - useCase3 (id: agent:hMRqhPVk)
- Agent - Custom Agent tutorial (id: agent:iXqDRS08)
```
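Rather than scanning the list by eye, you can filter it in code. The helper below is a hypothetical sketch. It only relies on the id and description attributes printed above and on the agent: prefix visible in that output. The usage example uses SimpleNamespace stand-ins so it runs without a Dataiku instance; in a notebook, you would pass project.list_llms() instead. The non-agent entry is a made-up example of an LLM ID.

```python
from types import SimpleNamespace


def find_agent_ids(llms, label):
    """Return the ids of agents whose description contains label, case-insensitively (hypothetical)."""
    label = label.lower()
    return [llm.id for llm in llms
            if llm.id.startswith("agent:") and label in llm.description.lower()]


# Stand-ins for the objects returned by project.list_llms()
llms = [
    SimpleNamespace(id="agent:QRfIU99Z", description="Agent - Tutorial"),
    SimpleNamespace(id="agent:iXqDRS08", description="Agent - Custom Agent tutorial"),
    SimpleNamespace(id="openai:my-connection:gpt-4o-mini", description="GPT-4o mini"),
]
print(find_agent_ids(llms, "custom agent"))  # ['agent:iXqDRS08']
```

The helper returns a list rather than a single ID because a broad label such as "tutorial" matches several agents.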

Once you know the agent’s ID, you can use it to call the agent, as shown in the code below:

```python
CODE_AGENT_ID = "agent:iXqDRS08"  # Replace with your agent's ID

llm = project.get_llm(CODE_AGENT_ID)
completion = llm.new_completion()
completion.with_message('Give all the professional information you can about the customer with ID: fdouetteau. Also include information about the company if you can.')
resp = completion.execute()
resp.text
```

```text
"The customer's name is Florian Douetteau, and he holds the position of CEO at the company named Dataiku.\n\n### Company Information:\n - Company Name: Dataiku\n - Overview: Dataiku is a global software company that specializes in machine learning and artificial intelligence. Founded in 2013, Dataiku aims to make data science accessible to everyone, helping businesses unlock the potential of artificial intelligence. Florian Douetteau, as a co-founder and CEO, has been instrumental in guiding the company's vision and operations."
```

Wrapping up

Congratulations on finishing this tutorial! You have learned how to convert a Code Agent into a Custom Agent that can be reused across projects. Along the way, you created a plugin, set up its code environment, declared the agent's parameters, and implemented the agent itself.

A custom agent can run in any project, so it must not depend on project-specific objects. Embed the tools it needs, ask for project-specific inputs such as datasets through parameters, and declare its dependencies in the plugin code environment. With this in mind, you can enrich your Dataiku projects with adaptable and reusable Custom Agents.

Here is the complete code of the custom agent:

agent.json

```json
{
  "meta": {
    "label": "Custom agent tutorial",
    "description": "DevAdvocacy -- Custom Agent Tutorial",
    "icon": "fas fa-magic"
  },
  "supportsImageInputs": false,
  "params": [
    {
      "name": "dataset_name",
      "label": "Customer SQL Dataset",
      "type": "DATASET",
      "mandatory": true
    }
  ]
}
```

Attention

The SQL query might be written differently depending on your SQL Engine.

agent.py

```python
from langchain import hub
from langchain.agents import AgentExecutor
from langchain.agents import create_openai_tools_agent
from langchain.tools import tool

from dataiku.llm.python import BaseLLM
from dataiku.langchain.dku_llm import DKUChatModel
from dataiku.langchain import LangchainToDKUTracer
import dataiku
from dataiku import SQLExecutor2
from duckduckgo_search import DDGS
from dataiku.sql import Constant, toSQL, Dialects

# Name of the OpenAI connection configured in your Dataiku instance
OPENAI_CONNECTION_NAME = "REPLACE_WITH_YOUR_CONNECTION_NAME"
model = DKUChatModel(llm_id=f"openai:{OPENAI_CONNECTION_NAME}:gpt-4o-mini")


def generate_get_customer(dataset_name: str):
    # dataset_name is captured by the closure, so the LLM never sees it
    @tool
    def get_customer(customer_id: str) -> str:
        """Get customer name, position and company information from database.
        The input is a customer id (stored as a string).
        The output is a string of the form:
        "The customer's name is \"{name}\", holding the position \"{job}\" at the company named {company}"
        """
        dataset = dataiku.Dataset(dataset_name)
        table_name = dataset.get_location_info().get('info', {}).get('quotedResolvedTableName')
        executor = SQLExecutor2(dataset=dataset)
        # Turn the customer ID into an escaped SQL literal instead of pasting it raw
        cid = Constant(str(customer_id))
        escaped_cid = toSQL(cid, dialect=Dialects.POSTGRES)  # Replace by your DB
        query_reader = executor.query_to_iter(
            f"""SELECT "name", "job", "company" FROM {table_name} WHERE "id" = {escaped_cid}""")
        for (name, job, company) in query_reader.iter_tuples():
            return f"The customer's name is \"{name}\", holding the position \"{job}\" at the company named {company}"
        return f"No information can be found about the customer {customer_id}"

    return get_customer


@tool
def search_company_info(company_name: str) -> str:
    """
    Use this tool when you need to retrieve information on a company.
    The input of this tool is the company name.
    The output is either a small recap of the company or "No information …"
    meaning that we couldn't find information about this company
    """
    with DDGS() as ddgs:
        # Keep only the first search result
        results = list(ddgs.text(f"{company_name} (company)", max_results=1))
        if results:
            return f"Information found about {company_name}: {results[0]['body']}"
        return f"No information found about {company_name}"


class MyLLM(BaseLLM):
    def __init__(self):
        pass

    def set_config(self, config, plugin_config):
        self.config = config
        # Name of the dataset selected by the user in the agent's Config section
        self.dataset = config.get("dataset_name")
        # Build the tool list per instance: appending to a shared module-level list
        # would add another get_customer tool each time set_config runs in the same process
        self.tools = [search_company_info, generate_get_customer(dataset_name=self.dataset)]
        self.agent = create_openai_tools_agent(model.with_config({"tags": ["agent_llm"]}),
                                               self.tools,
                                               hub.pull("hwchase17/openai-tools-agent"))

    # The type names are quoted because they are not imported in this file;
    # quoted annotations are not evaluated when the method is defined
    async def aprocess_stream(self, query: "SingleCompletionQuery", settings: "CompletionSettings",
                              trace: "SpanBuilder") -> "CompletionResponse":
        # Use the content of the first message as the agent's input
        prompt = query["messages"][0]["content"]
        tracer = LangchainToDKUTracer(dku_trace=trace)
        agent_executor = AgentExecutor(agent=self.agent, tools=self.tools)

        async for event in agent_executor.astream_events({"input": prompt}, version="v2",
                                                         config={"callbacks": [tracer]}):
            kind = event["event"]
            if kind == "on_chat_model_stream":
                # Forward the text generated by the LLM as it arrives
                content = event["data"]["chunk"].content
                if content:
                    yield {"chunk": {"text": content}}
            elif kind == "on_tool_start":
                # Event chunks are not part of the answer itself,
                # but can provide progress information
                yield {"chunk": {"type": "event", "eventKind": "tool_call",
                                 "eventData": {"name": event["name"]}}}
```