Querying the LLM from a headless API

In this tutorial, you will learn how to build an API from a web application’s backend (called a headless API) and how to use it from code. You will use the LLM Mesh to query an LLM and serve the response.

You can use a headless web application to create an API endpoint for a particular purpose that doesn’t fit well in the API Node, for example, if you want to use an SQLExecutor or access datasets. The API Node is still the recommended deployment for real-time inference and for all the use cases it was designed for (see this documentation for more information about the API Node).

Prerequisites

- Flask backend: Dataiku >= 13.1
- FastAPI backend: Dataiku >= 14.1
- Dash backend: Dataiku >= 13.1 and a code environment with the dash package

Building the server#

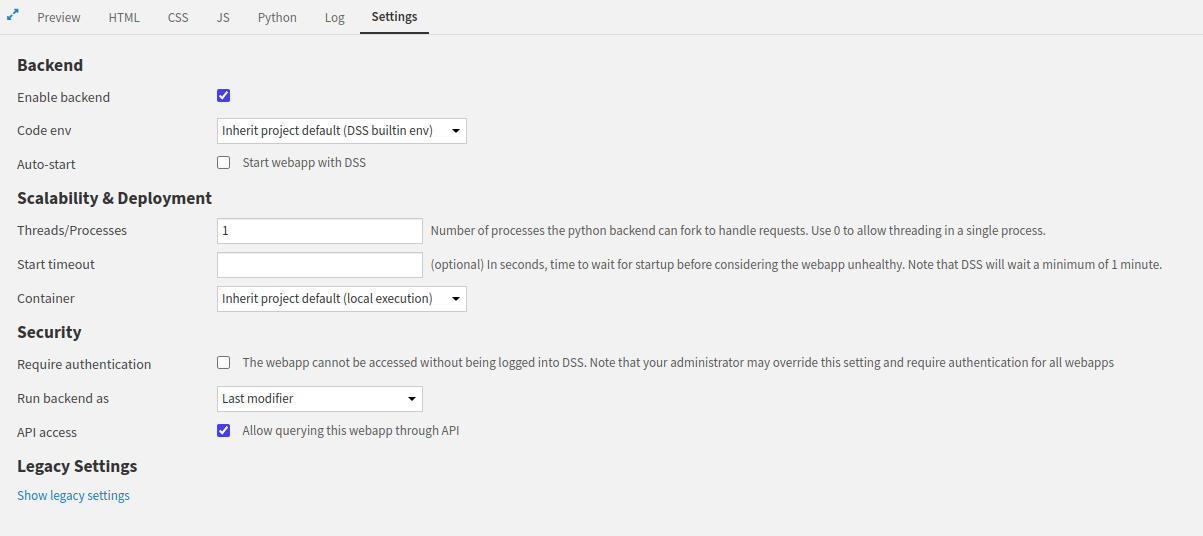

The first step is to define the routes you want your API to handle. A single route is responsible for a (simple) process. Dataiku provides an easy way to describe those routes. Relying on a Flask or FastAPI server helps you return the desired resource types. Check the API access in the web apps’ settings to use this functionality, as shown in Figure 1.

Figure 1: Enabling API access.#

This tutorial relies on a single route (/query) to query an LLM.

Because the message sent to the LLM can be very long, passing it in the URL is not an option. You have to send it in the body of the request instead. The GET method is not suited for this, because a GET request is not expected to carry a body. You will therefore use the POST method and send the message in the JSON body of the request.

When a request reaches the server, the server proceeds in three steps:

1. Check that the Content-Type header is set to application/json before accessing the body.
2. Extract the message from the body.
3. Send the message to the LLM and, if the query succeeds, return the response to the user.

The query function in Code 1 shows how to do this. The remaining lines set up the whole application.
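You can check the three steps above without a running Dataiku instance. The following is a minimal, framework-independent sketch. The `handle_query` and `is_json_content_type` functions are hypothetical helpers, and the `complete` callable stands in for the LLM Mesh call, returning the answer text or `None` on failure. The helper parses the media type with the standard library, so a header such as `application/json; charset=utf-8` is still accepted where an exact string comparison would reject it.

```python
import json
from email.message import Message
from typing import Callable, Optional, Tuple


def is_json_content_type(header: Optional[str]) -> bool:
    """Return True if the Content-Type header denotes JSON, ignoring parameters."""
    if not header:
        return False
    msg = Message()
    msg["Content-Type"] = header
    # get_content_type() lowercases the value and drops parameters such as charset
    return msg.get_content_type() == "application/json"


def handle_query(content_type: Optional[str], body: bytes,
                 complete: Callable[[str], Optional[str]]) -> Tuple[int, dict]:
    """Hypothetical handler: return (HTTP status code, JSON-serializable payload).

    `complete` is a stand-in for the LLM Mesh call (assumption): it returns
    the answer text, or None when the query fails.
    """
    # Step 1: check the Content-Type before touching the body
    if not is_json_content_type(content_type):
        return 415, {"error": "Content-Type is not supported!"}

    # Step 2: extract the message from the JSON body
    try:
        data = json.loads(body)
    except ValueError:  # covers JSONDecodeError and UnicodeDecodeError
        return 400, {"error": "Body is not valid JSON"}
    user_message = data.get("message") if isinstance(data, dict) else None
    if not user_message:
        return 200, {"message": "No message was found"}

    # Step 3: query the LLM and return its answer
    answer = complete(user_message)
    if answer is None:
        return 200, {"message": "Something went wrong"}
    return 200, {"message": answer}


# Usage with a stub "LLM" that upper-cases the prompt
status, payload = handle_query("application/json; charset=utf-8",
                               b'{"message": "hello"}', lambda m: m.upper())
print(status, payload)  # 200 {'message': 'HELLO'}
```

Keeping the logic out of the route function means the same checks can back a Flask, FastAPI, or Dash route, with only the request and response plumbing differing.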

Flask:

```python
import dataiku
from flask import request, jsonify

# Replace with the ID of an LLM available on your instance
LLM_ID = "openai:openai:gpt-3.5-turbo"

llm = dataiku.api_client().get_default_project().get_llm(LLM_ID)


# `app` is the Flask application provided by the Dataiku web app backend
@app.route('/query', methods=['POST'])
def query():
    # Only accept JSON bodies
    if not request.is_json:
        return 'Content-Type is not supported!', 415

    data = request.get_json()
    user_message = data.get('message')

    if user_message:
        # Send the message to the LLM through the LLM Mesh
        completion = llm.new_completion()
        completion.with_message(user_message)
        resp = completion.execute()
        msg = resp.text if resp.success else "Something went wrong"
    else:
        msg = "No message was found"

    # jsonify serializes the payload and sets Content-Type to application/json
    return jsonify({"message": msg})
```

FastAPI:

```python
import dataiku
from fastapi import Request, Response
from fastapi.responses import JSONResponse

# Replace with the ID of an LLM available on your instance
LLM_ID = "openai:openai:gpt-3.5-turbo"

llm = dataiku.api_client().get_default_project().get_llm(LLM_ID)


# `app` is the FastAPI application provided by the Dataiku web app backend
@app.post("/query")
async def query(request: Request):
    content_type = request.headers.get("content-type", "")
    # Only accept JSON bodies (ignore parameters such as "; charset=utf-8")
    if content_type.split(";")[0].strip().lower() != "application/json":
        return Response(content="Content-Type is not supported!",
                        media_type="text/plain", status_code=415)

    json_data = await request.json()
    user_message = json_data.get("message")

    if user_message:
        # Send the message to the LLM through the LLM Mesh
        completion = llm.new_completion()
        completion.with_message(user_message)
        resp = completion.execute()
        msg = resp.text if resp.success else "Something went wrong"
    else:
        msg = "No message was found"

    return JSONResponse(content={"message": msg})
```

Dash:

```python
import dataiku
from dash import html
from flask import request, jsonify

# Replace with the ID of an LLM available on your instance
LLM_ID = "openai:openai:gpt-3.5-turbo"

llm = dataiku.api_client().get_default_project().get_llm(LLM_ID)


# `app` is the Dash application provided by the Dataiku web app backend;
# app.server is the underlying Flask server, which handles the route
@app.server.route('/query', methods=['POST'])
def query():
    # Only accept JSON bodies
    if not request.is_json:
        return 'Content-Type is not supported!', 415

    data = request.get_json()
    user_message = data.get('message')

    if user_message:
        # Send the message to the LLM through the LLM Mesh
        completion = llm.new_completion()
        completion.with_message(user_message)
        resp = completion.execute()
        msg = resp.text if resp.success else "Something went wrong"
    else:
        msg = "No message was found"

    # jsonify serializes the payload and sets Content-Type to application/json
    return jsonify({"message": msg})


# A Dash application won't start without a layout,
# so define one even though this web app has no interface
app.layout = html.Div("Headless web app. No interface")
```

Querying the API

To access the headless API, you must be logged in to the instance or have an API key that identifies you. If you need help setting up an API key, please read this tutorial. You can then interact with a headless API in several ways.

Using cURL requires an API key (or an equivalent way of authenticating, depending on the authentication method set on the Dataiku instance) to access the headless API. Once you have this API key, you can access the API endpoint with the following command.

The WEBAPP_ID is the identifier that appears before the underscore in the web app URL. For example, if the web app URL in Dataiku is /projects/HEADLESS/webapps/kUDF1mQ_api/view, the WEBAPP_ID is kUDF1mQ and the PROJECT_KEY is HEADLESS.
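If you script your calls, you can extract both identifiers from the URL. The following is a minimal sketch. It assumes the URL follows the /projects/PROJECT_KEY/webapps/WEBAPP_ID_suffix/... shape of the example above. `parse_webapp_url` is a hypothetical helper and is not part of the Dataiku API.

```python
import re
from typing import Tuple
from urllib.parse import urlparse

# Assumed shape: /projects/<PROJECT_KEY>/webapps/<WEBAPP_ID>[_<suffix>]/...
# The web app ID is taken up to the first "_" or "/"
_WEBAPP_PATH = re.compile(r"/projects/([^/]+)/webapps/([^/_]+)")


def parse_webapp_url(url: str) -> Tuple[str, str]:
    """Return (PROJECT_KEY, WEBAPP_ID) from a Dataiku web app URL or path."""
    path = urlparse(url).path  # a bare path is returned unchanged
    match = _WEBAPP_PATH.search(path)
    if match is None:
        raise ValueError(f"Not a web app URL: {url!r}")
    return match.group(1), match.group(2)


print(parse_webapp_url("/projects/HEADLESS/webapps/kUDF1mQ_api/view"))
# ('HEADLESS', 'kUDF1mQ')
```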

cURL command to fetch data

```shell
curl -X POST --header 'Authorization: Bearer <USE_YOUR_API_KEY>' \
  'http://<DATAIKU_ADDRESS>:<DATAIKU_PORT>/web-apps-backends/<PROJECT_KEY>/<WEBAPP_ID>/query' \
  --header 'Content-Type: application/json' \
  --data '{
    "message": "Write a haiku about board games"
  }'
```
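If cURL is not available, you can send the same request with Python’s standard library. The following is a minimal sketch. It assumes the /web-apps-backends/PROJECT_KEY/WEBAPP_ID/query endpoint pattern and the Bearer-token header from the cURL command above. `build_query_request` and `send_query` are hypothetical helpers, and the address, port, and key are placeholders. Building the request is kept separate from sending it so that you can inspect the request first.

```python
import json
import urllib.request


def build_query_request(dataiku_url: str, project_key: str, webapp_id: str,
                        api_key: str, message: str) -> urllib.request.Request:
    """Build the same POST request as the cURL command (hypothetical helper)."""
    url = (f"{dataiku_url.rstrip('/')}/web-apps-backends/"
           f"{project_key}/{webapp_id}/query")
    body = json.dumps({"message": message}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,  # the message travels in the body, not in the URL
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_query(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the raw response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


# Placeholder address and key: replace them with your own values
req = build_query_request("http://localhost:11200", "HEADLESS", "kUDF1mQ",
                          "<USE_YOUR_API_KEY>", "Write a haiku about board games")
print(req.get_method(), req.full_url)
# POST http://localhost:11200/web-apps-backends/HEADLESS/kUDF1mQ/query
# print(send_query(req))  # uncomment when targeting a running instance
```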

You can also access the headless API using the Python API. Use the dataikuapi or the dataiku package to create a dataikuapi.DSSClient, as shown in Code 3.

```python
import dataiku
import dataikuapi

API_KEY = ""
DATAIKU_LOCATION = ""  # http(s)://DATAIKU_HOST:DATAIKU_PORT
PROJECT_KEY = ""
WEBAPP_ID = ""

# Depending on your case, use one of the following:
# - from outside Dataiku, authenticate with an API key
# client = dataikuapi.DSSClient(DATAIKU_LOCATION, API_KEY)
# - from inside Dataiku (notebook, recipe, ...), use the built-in client
client = dataiku.api_client()

project = client.get_project(PROJECT_KEY)
webapp = project.get_webapp(WEBAPP_ID)
backend = webapp.get_backend_client()
backend.session.headers['Content-Type'] = 'application/json'

# Query the LLM through the headless API
response = backend.session.post(backend.base_url + '/query', json={'message': 'Hello'})
print(response.text)
```

Wrapping up

If you need to give access to unauthenticated users, you can turn your web application into a public one, as described in this documentation. Now that you understand how to turn a web application into a headless one, you can create an agent-headless API.